The effects of spatial auditory and visual cues on mixed reality remote collaboration

Collaborative Mixed Reality (MR) technologies enable remote people to work together by sharing communication cues intrinsic to face-to-face conversation, such as eye gaze and hand gestures. While the role of visual cues has been investigated in many collaborative MR systems, the use of spatial auditory cues remains underexplored. In this paper, we present an MR remote collaboration system that shares both spatial auditory and visual cues between collaborators to help them complete a search task. Through two user studies in a large office, we found that, compared to non-spatialized audio, the remote expert's spatialized voice and auditory beacons enabled local workers to find small occluded objects with significantly stronger spatial perception. We also found that while the spatial auditory cues could convey the spatial layout and a general direction in which to search for the target object, the visual head frustum and hand gestures intuitively showed the remote expert's movements and the position of the target. Integrating visual cues (especially the head frustum) with the spatial auditory cues significantly improved the local worker's task performance, social presence, and spatial perception of the environment.

Figure: Overview of our MR remote collaboration system. The remote expert uses VR teleportation to move virtually through a 3D replica of the local space. The expert also talks to the local worker and provides spatialized auditory beacons, which the local worker hears through an AR headset. When visual cues are enabled, the expert's hand and head representations are visible to the local worker. The local worker's egocentric view is always shared live with the remote expert.

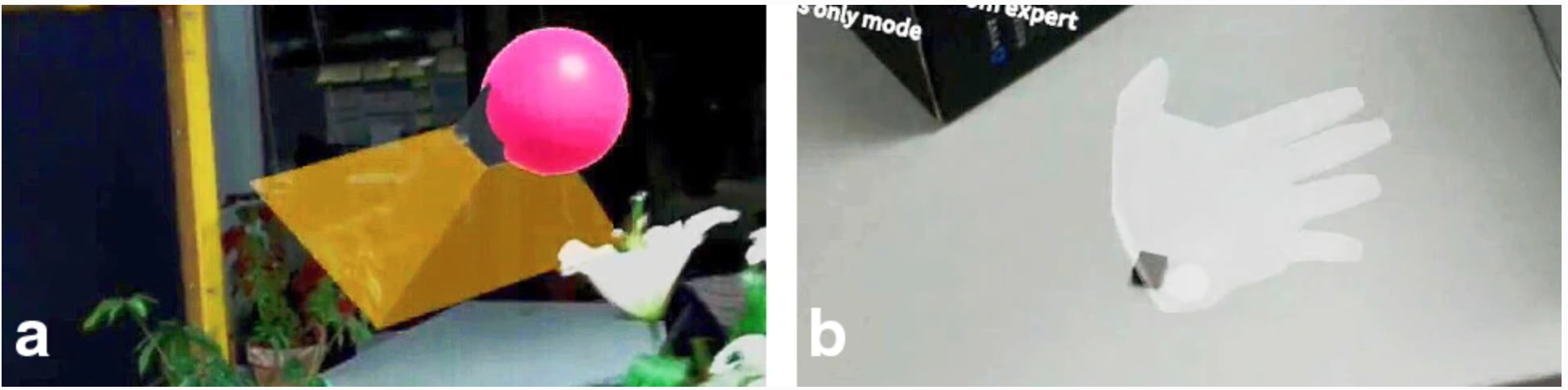

Figure: Illustrations of the head frustum (a) and hand gesture (b) shared from the remote side to the local side.

Conclusion

In this paper, we presented an MR remote collaboration system that features both spatial auditory and visual cues. We found that the spatial auditory cues could guide a local worker through a large space and give the worker better spatial awareness in an MR remote collaborative search task. We also found that the visual cues, especially the head frustum, further helped local workers complete the search task faster and with a better spatial and social experience. The results of our studies also offer insights into how the conversation between local and remote collaborators might become more interactive and intuitive, and how local workers could carry out the task more confidently when spatial auditory and visual cues are integrated. Because visual cues are intuitive to perceive and spatial auditory cues convey directions across the full 360° and distances, combining the two made some participants feel as if they were interacting with a real person.
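To make concrete how a spatialized beacon can carry both direction and distance, the sketch below computes a head-relative azimuth and a loudness factor for a beacon on the floor plane. It is not the authors' implementation, which is not described here. The `Pose` and `BeaconCue` types, the yaw convention, the inverse-distance rolloff, and the constant-power stereo pan are all illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Listener head pose on the horizontal floor plane (hypothetical helper)."""
    x: float        # metres
    z: float        # metres
    yaw_deg: float  # 0 = facing +z; positive yaw turns toward +x (assumed convention)


@dataclass
class BeaconCue:
    azimuth_deg: float  # 0 = straight ahead, +90 = right, range [-180, 180)
    distance: float     # metres
    gain: float         # overall loudness factor in (0, 1]
    left: float         # constant-power stereo gain, left ear
    right: float        # constant-power stereo gain, right ear


def beacon_cue(listener: Pose, bx: float, bz: float, ref_dist: float = 1.0) -> BeaconCue:
    """Direction and distance cues for a beacon at (bx, bz), relative to the listener's head."""
    dx, dz = bx - listener.x, bz - listener.z
    distance = math.hypot(dx, dz)
    # World bearing measured from +z toward +x, then made head-relative and wrapped.
    bearing = math.degrees(math.atan2(dx, dz))
    azimuth = (bearing - listener.yaw_deg + 180.0) % 360.0 - 180.0
    # Inverse-distance rolloff, clamped to full volume inside ref_dist.
    gain = ref_dist / max(distance, ref_dist)
    # Constant-power pan: sin(azimuth) maps the left/right position to [-1, 1].
    pan = math.sin(math.radians(azimuth))
    theta = (pan + 1.0) * math.pi / 4.0
    return BeaconCue(azimuth, distance, gain, gain * math.cos(theta), gain * math.sin(theta))
```

For example, a listener at the origin facing +z hears a beacon at `(3.0, 0.0)` at an azimuth of 90° (to the right) with one third of full volume. Turning to face it (`yaw_deg=90.0`) brings the azimuth to 0° and balances the two ears. The gain formula has the same form as the Web Audio API's `inverse` distance model with a rolloff factor of 1. Plain stereo panning cannot tell front from back (30° and 150° pan identically), which is why headset spatializers typically use HRTF-based rendering instead.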
